Google Cloud Professional Database Engineer Exam Guide

When I first passed this exam back in December 2023, it was widely considered one of the more approachable professional level certifications, essentially a “Cloud SQL exam.” Fast forward to now, and I can tell you that is no longer the case. The exam has matured into a broad, nuanced assessment that requires a much deeper understanding of Google’s entire database portfolio.

Regardless of your experience level as a DBA or Architect, this is no longer a certification to be taken lightly. The syllabus has evolved significantly, and I’ve put together this guide to highlight the core competencies and updated objectives you’ll need to master for a successful result.

What is the Professional Cloud Database Engineer certification?

As per the official exam page:

“A Professional Cloud Database Engineer designs, creates, manages, and troubleshoots Google Cloud databases… they translate business and technical requirements into scalable and cost-effective database solutions.”

What the Exam is NOT: It is critical to understand that this is not a SQL Engineering or Data Analysis exam.

- No Complex SQL: You aren’t expected to write complex stored procedures, multi-nested subqueries, or advanced window functions. If you know basic SELECT * FROM table WHERE condition, you have enough “language” knowledge for this test.

- No Data Science: You won’t be tested on how to join tables for a machine learning model or how to optimise a specific analytical query.

- Architecture Over Code: The focus is on Infrastructure. You are being tested on your ability to decide which database to use, how to migrate it with zero downtime, and how to secure it.

The “How” vs. The “What” Instead of asking you to write a query, the exam covers tasks like:

- Migration: “How do we move 5TB of data from an on-premises Oracle instance to AlloyDB without taking the application offline?”

- Security: “How do we ensure that only the application’s service account can access this specific Spanner instance?”

- Performance: “Why is this Bigtable cluster experiencing high latency during peak hours, and should we add more nodes or change the row key design?”

Who is this certification aimed at?

This is for Database Administrators (DBAs), Data Engineers, and Solutions Architects. If you spend your time deciding between PostgreSQL on Cloud SQL vs. AlloyDB, troubleshooting migration parallelism in DMS, tuning Spanner schemas to avoid hotspots, or configuring Bigtable app profiles to manage replication consistency and traffic routing, this is your certification.

Official Exam Domains & Weighting

To prepare effectively, you should understand how Google weights the different areas of the exam.

| Section | Weighting | Key Focus Areas |

|---|---|---|

| 1. Design innovative, scalable, and highly available cloud database solutions | ~32% | Sizing compute and storage; evaluating HA/DR tradeoffs; SQL vs NoSQL vs Vector requirements; and GenAI/LLM use cases. |

| 2. Manage a solution that can span multiple database technologies | ~25% | IAM and user access; performance monitoring and vitals; backup/recovery (RTO/RPO/PITR); and automation. |

| 3. Migrate data solutions | ~23% | Migration strategies (zero/near-zero downtime); DDL/DML conversion; and migration tool selection. |

| 4. Deploy scalable and highly available databases in Google Cloud | ~20% | Provisioning and testing HA/DR; multi-regional replication; and read replica scaling. |

Modern Database Engineering: Architecting Resilient, Global-Scale Data Platforms

Modern Database Engineering is no longer about “tending” to an instance; it’s more broad and encompasses Platform Architecture. As a Database Engineer, your scope has expanded from simple query optimisation to building globally distributed, compliant, and always-on data infrastructure that serves as the foundation for the modern enterprise.

The Google Cloud Database Portfolio

To architect these modern platforms, you must be able to navigate the entire Google Cloud data stack. While the exam touches on almost every service, success depends on mastering the “Big 4,” the heavy hitters that form the core of the certification’s technical depth.

The Big 4: Core Google Cloud Data Products of Modern Data Engineering

- Cloud SQL: The go-to for standard relational workloads (MySQL, PostgreSQL, SQL Server). For mission-critical environments, it is essential to understand the architectural benefits of the Enterprise Plus edition.

- AlloyDB: The high-performance, AI-ready PostgreSQL engine. You must understand its ScaNN index for vector search and its ability to handle HTAP (Hybrid Transactional/Analytical) workloads.

- Cloud Spanner: The “unlimited” relational database. It is the gold standard for global consistency, synchronous replication, and “Zero RPO.”

- Cloud Bigtable: The high-throughput NoSQL choice. If a requirement mentions sub-10ms latency at petabyte scale (IoT, AdTech, or FinTech), this is the architectural answer.

Remaining Data Services

While the “Big 4” handle the bulk of enterprise data, a well-rounded Database Engineer must understand when to deploy specialised services for specific use cases. These tools often serve as critical components in a multi-layered data architecture:

- Firestore: A serverless, auto-scaling NoSQL document database. It is the preferred choice for mobile and web applications that require real-time synchronisation, offline support, and a flexible schema for hierarchical data.

- Memorystore: A fully managed in-memory data store for Redis and Memcached. In a high-performance stack, Memorystore is utilised for sub-millisecond caching, session management, and reducing the read-load on your primary relational databases.

- BigQuery: Though primarily an enterprise data warehouse, the modern engineer must be able to bridge the gap between operational databases and analytics. This involves mastering BigQuery Federation to query external data in-place or utilising Datastream for Change Data Capture (CDC) to power real-time analytical dashboards.

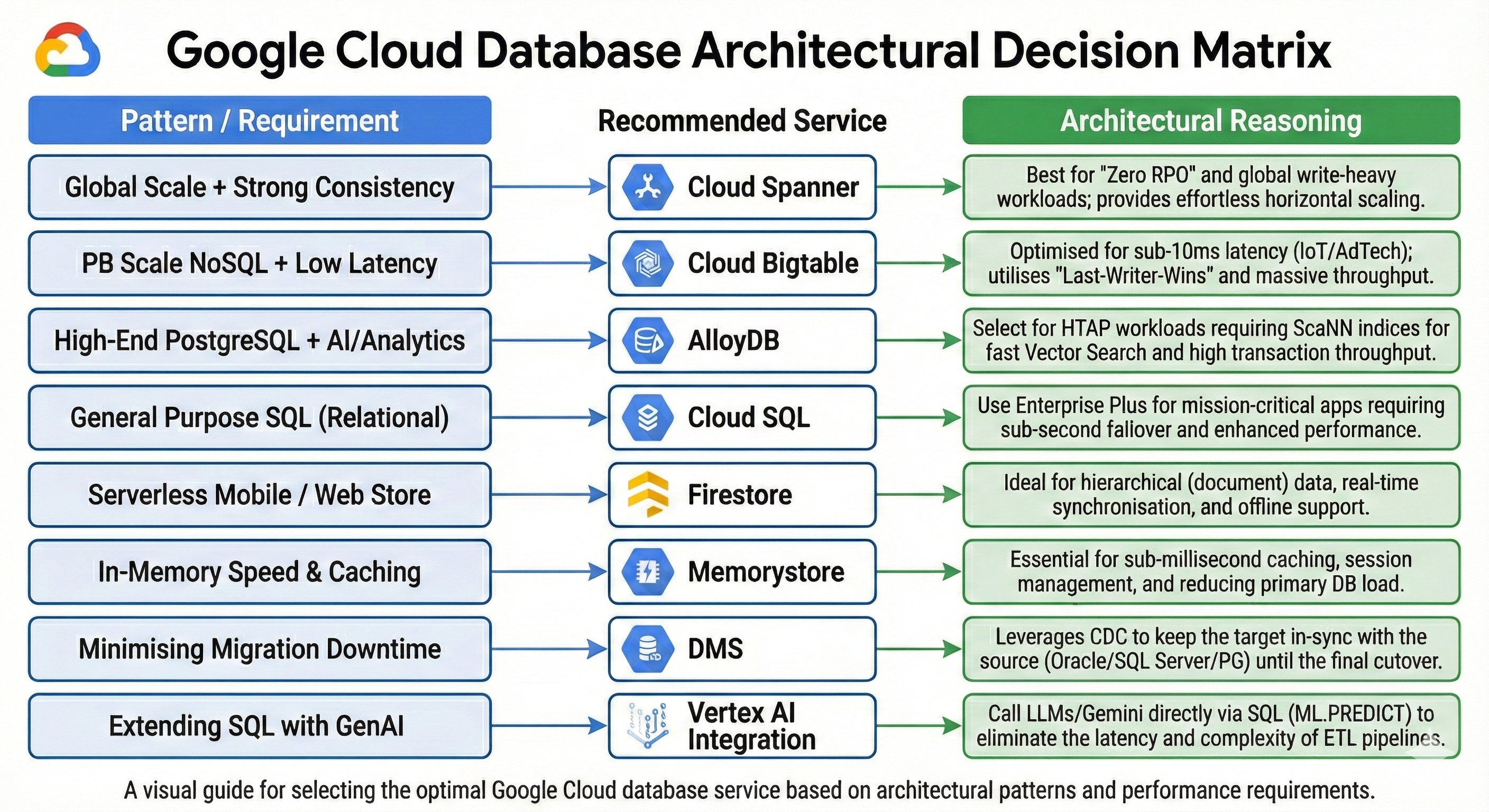

Figure 1: Architecture Pattern Database Service Matrix

Figure 1: Architecture Pattern Database Service Matrix

Domain 1. Architecting for Global Scale & High Availability

Global scale requires a deep understanding of consistency models. As a Database Engineer, you must master how Spanner uses TrueTime for external consistency across continents, but also how to leverage AlloyDB and Cloud SQL Enterprise Plus features to build resilient, multi-regional architectures.

Technical Implementation for the “Big Four”

To successfully manage these systems, you must move beyond basic provisioning and master the specific technical levers that control performance, integrity, and availability:

- Cloud Spanner (Global Relational): Managing Spanner effectively requires a deep dive into synchronous replication and External Consistency.

- Engineering Decision: To prevent “hotspots” (tablet splits) caused by sequential primary keys, you must implement advanced schema designs—specifically using UUID v4 or bit-reversed sequences.

- Durability Logic: You must understand Spanner’s synchronous replication, which ensures that a transaction is not committed until a majority of replicas agree. This provides Zero RPO at a global scale, as data is hardened across multiple zones or regions before the commit is confirmed.

- Operational Logic: While the managed service handles much of the complexity, the Engineer must identify which components autoscale natively and where manual node or processing unit adjustments are required to handle predictable traffic spikes.

- Cloud Bigtable (High-Velocity NoSQL): Designed for sub-10ms latency at petabyte scale, Bigtable requires precise configuration of its access patterns.

- Traffic Management: You must master the configuration of App Profiles to enforce intelligent traffic routing and workload isolation.

- Consistency Trade-offs: You are responsible for determining when to deploy multi-cluster instances to balance high availability (HA) against the replication lag inherent in eventual consistency models.

- AlloyDB (High-Performance PostgreSQL): This represents the evolution of relational engines for enterprise HTAP (Hybrid Transactional/Analytical Processing) workloads.

-

- Global Read Access: You must be able to configure AlloyDB secondary clusters to provide low-latency read access in distant regions.

-

- Asynchronous Nuance: Unlike Spanner, these secondary clusters rely on asynchronous replication. In an engineering context, this means you are prioritising global availability and read speed over strict global consistency, a critical trade-off when designing for “speed of light” constraints.

- Acceleration: Utilise the columnar engine to accelerate analytical queries without degrading transactional performance.

- Connectivity: Secure and optimise the multi-node architecture by implementing the AlloyDB Auth Proxy for high-performance, encrypted connections.

-

- Cloud SQL (The Enterprise Standard): The exam focuses on the technical distinctions of the Enterprise Plus tier.

- Advanced Disaster Recovery: In Enterprise Plus, you can designate a DR Replica for “Advanced DR.” This enables a zero-data-loss failover (switchover) and automatic reinstatement of the old primary as a replica.

- Business Continuity: You must be capable of engineering cross-region read replicas that meet strict RTO (Recovery Time Objective) and RPO (Recovery Point Objective) targets.

- Operational Upgrades: A key differentiator is the sub-second downtime for maintenance and scaling operations in Enterprise Plus. As an engineer, you should use this to justify “near-zero” maintenance windows for mission-critical apps.

- Storage Management: Monitor and tune Automatic Storage Increase to ensure write-heavy workloads do not encounter disk-level bottlenecks.

| Feature | Cloud Bigtable | Cloud Spanner | AlloyDB / Cloud SQL |

|---|---|---|---|

| Consistency Model | Eventual (multi-cluster) or Read-your-writes (single-cluster). | External Consistency (Strong global consistency). | Strong (Local cluster) / Eventual (Cross-region replicas). |

| Conflict Strategy | Last-Writer-Wins (LWW): Highest timestamp wins. | Synchronous Replication: Majority consensus before commit. | Primary-Led: The primary instance handles all writes; replicas follow. |

| The “Tie-Breaker” | Timestamps: (Server or client-side). | TrueTime: Atomic clock/GPS API for global ordering. | Replication Lag: The “speed of light” delay between regions. |

| Risk of Data Loss | Higher: Concurrent writes to different clusters may clobber data. | Near Zero: ACID-compliant and globally serialized. | Low (but non-zero): Potential for data loss if the primary fails before a cross-region sync. |

| Primary Use Case | High-throughput IoT, AdTech, and “Fast Data.” | Global Financial systems and ERPs (Integrity is non-negotiable). | Enterprise applications and HTAP (Analytical + Transactional) workloads. |

Domain 2. Managing Complex Migrations

Migration is now a high-stakes engineering project. The exam expects you to handle heterogeneous migrations (e.g., Oracle to AlloyDB) using Database Migration Service (DMS). This involves mastering Change Data Capture (CDC) for minimal downtime and troubleshooting parallelism to optimise throughput without crashing source systems.

To succeed, you must master the following operational pillars:

1. Hybrid Connectivity: The Transport Layer

Migration success depends on the “pipe.” Before data starts flowing, you must choose the connectivity method that balances cost, security, and performance.

- Cloud VPN: Best for smaller datasets or non-critical migrations. It is cost-effective and encrypted over the public internet but prone to the throughput limitations and “jitter” of the open web.

- Dedicated/Partner Interconnect: Ideal for large-scale Oracle or PostgreSQL migrations. It provides high throughput, low latency, and consistent performance, which is vital to avoid packet loss and latency spikes during the massive initial data load.

-

2. CDC & Lifecycle Management

You are expected to manage the end-to-end lifecycle of a migration, specifically utilising Change Data Capture (CDC) to achieve near-zero downtime. This involves:

- Pipeline Control: Knowing how to start, stop, and restart pipelines without losing data.

- Sync Logic: Maintaining the continuous stream of changes from the source to the target until the final cutover.

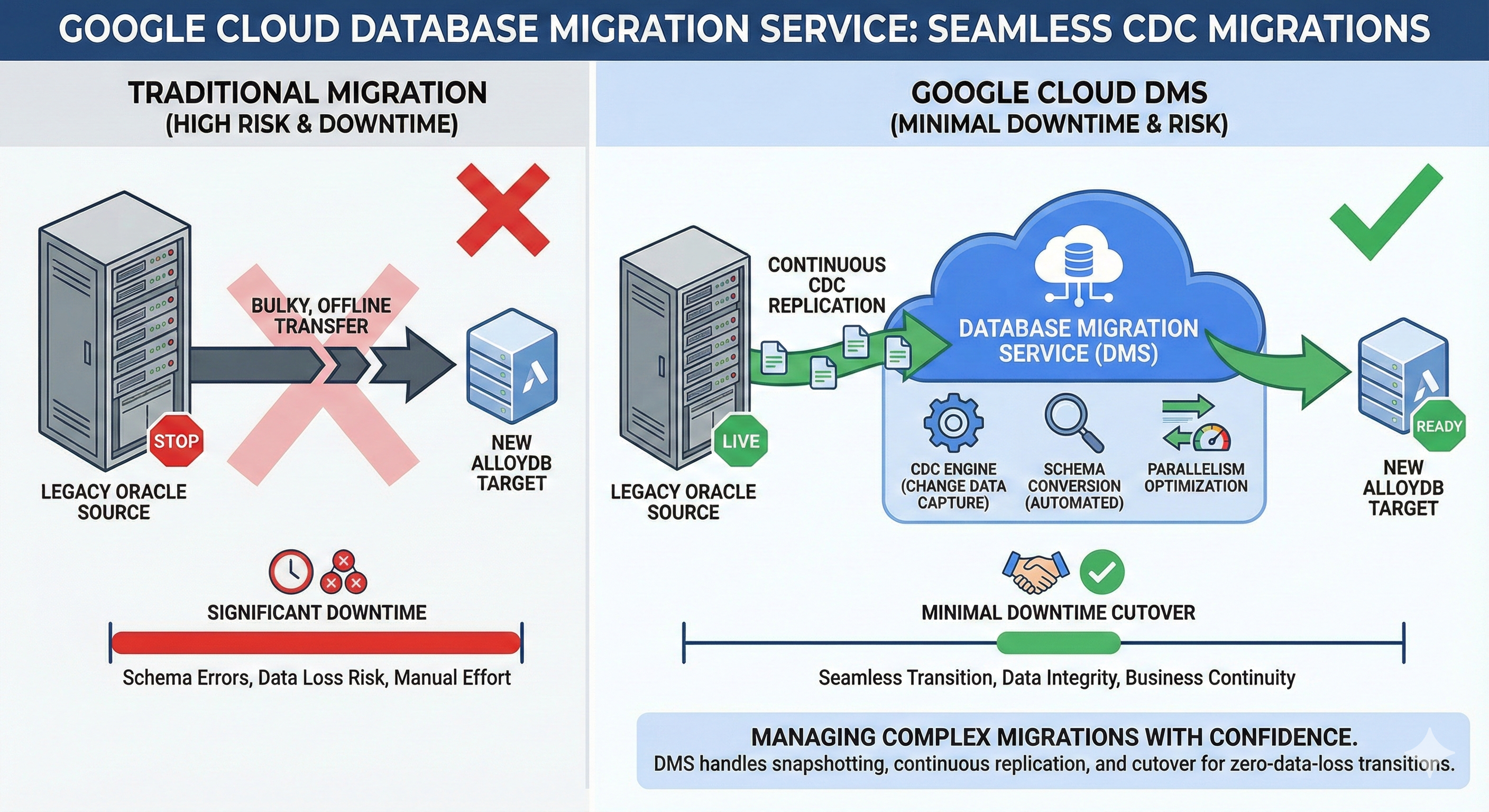

Figure 2: Database Migration Service (DMS) Change Data Capture (CDC) Database Migration

Figure 2: Database Migration Service (DMS) Change Data Capture (CDC) Database Migration

3. Parallelism & Throughput Tuning

Mastering performance is about balance. You must know how to configure maximum parallelism to:

- Optimize data transfer speeds to saturate your Interconnect/VPN capacity.

- Avoid exceeding the connection limits or CPU/IOPS capacity of your source production systems, preventing a migration from becoming a self-inflicted Denial of Service (DoS) attack.

4. Resilient Troubleshooting

The exam tests your ability to resolve failures within automated pipelines. This includes:

- Schema Parity: Ensuring data integrity across different database engines (heterogeneous).

- Conversion Breaks: Identifying where a data type conversion might fail during the initial load or the continuous replication phase.

Domain 3. Data Residency & Compliance

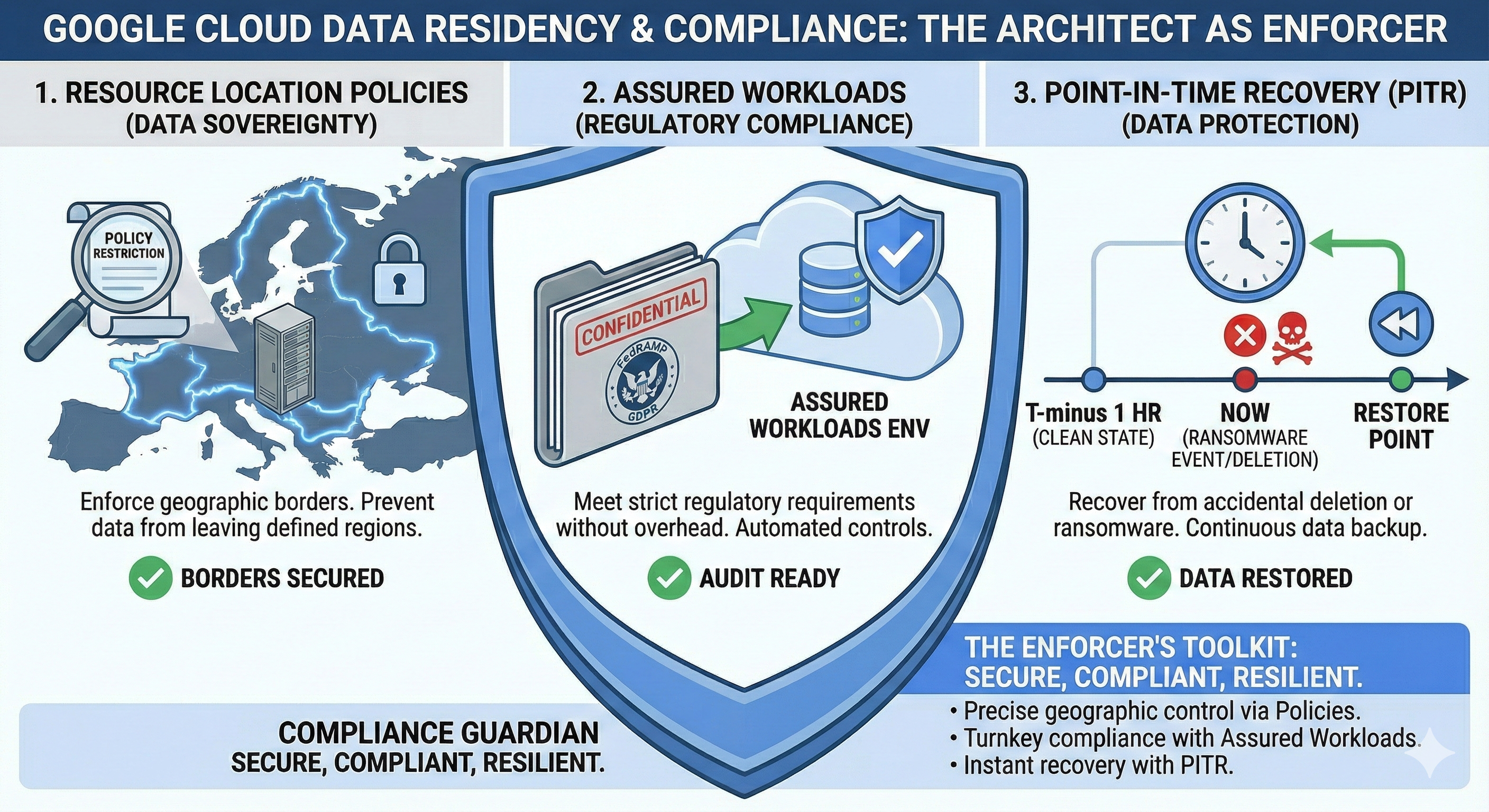

Data is highly regulated, and as the Database Engineer, you are the enforcer. The exam expects you to manage three distinct layers of compliance to ensure data integrity and legal adherence.

Layer 1: Sovereign Controls with Assured Workloads

It is no longer just about where the data sits; it is about who can touch it.

- Data Residency: Using Resource Location Policies to restrict data-at-rest to specific regions (e.g.,

europe-west2). - Personnel Access Controls: Leveraging Assured Workloads to ensure only authorised Google personnel in specific jurisdictions can provide support for your environment.

Layer 2: Resilience with Point-in-Time Recovery (PITR)

PITR is your ultimate safety net against “the human element,” ransomware or accidental DROP TABLE commands.

- Version Retention: Understanding the storage trade-offs of keeping “stale” data versions to allow for microsecond-precision recovery within a 7-day window.

Figure 3: Data Residency and Compliance Overview

Figure 3: Data Residency and Compliance Overview

Domain 4. Security at Every Layer

Security has evolved from a peripheral concern to a core architectural pillar. The exam adopts a “Zero Trust” mentality, you never implicitly trust a user or a network packet just because it’s “inside” your cloud environment.

Pillar 1: Identity as the New Perimeter

- IAM Database Auth: Moving away from static, legacy passwords to relying on Google Cloud identity and short-lived tokens.

- Cloud SQL/AlloyDB Auth Proxy: Using these tools to handle the identity chain and ephemeral tokens so applications don’t have to.

- Secret Manager: When legacy authentication is unavoidable, knowing how to tightly manage and rotate secrets programmatically.

Pillar 2: Network Isolation & Exfiltration Prevention It is not enough to simply place a database on a private IP; you must engineer the perimeter to prevent data exfiltration and ensure secure cross-project communication.

- Shared VPC Architecture: In large-scale enterprises, databases typically reside in a Service Project, while the network infrastructure is managed in a Host Project. As an engineer, you must master the IAM granularity required for this, specifically the Compute Network User role, which allows your database instances to “consume” the network resources of the host project.

- Private Service Access (PSA) vs. Private Service Connect (PSC): You must be able to choose the right connectivity model based on the organisation’s scale.

- PSA (The Peering Model): Relies on VPC Network Peering. Most legacy managed databases connect this way. It requires reserving a broad internal IP range (typically a /16) exclusively for Google services.

- PSC (The Service Endpoint Model): The modern, service-centric standard. Instead of peering entire networks, PSC creates a specific endpoint (a single internal IP) in your VPC that points to the database service.

- Engineering Guardrails (The Trade-offs):

- IP Exhaustion: PSA often leads to IP exhaustion because it “squats” on large CIDR blocks. PSC solves this by consuming only one IP per instance/endpoint.

- Transitive Routing: PSA (Peering) is non-transitive, meaning peered networks cannot reach the database. PSC bypasses this limitation, making it the “correct” answer for connecting to databases from multiple VPCs or complex hub-and-spoke topologies.

- IP Overlap: Since PSC doesn’t use peering, it allows you to connect to databases even if the consumer and producer networks have overlapping IP ranges.

- VPC Service Controls (VPC-SC): This acts as your final “bulkhead.” By defining a service perimeter, you ensure that even if a user has valid IAM credentials, they cannot move data across unauthorised boundaries (e.g., preventing a Spanner export to a public bucket outside the organisation).

Pillar 3: Data Encryption & Sovereignty (CMEK)

- Customer-Managed Encryption Keys (CMEK): Mastering Cloud KMS to manage your own keys. By controlling rotation and revocation, you maintain absolute sovereignty over your data.

- Cloud Audit Logs: Configuring Data Access logs to answer the question: “Who read this specific row of sensitive PII?”

Domain 5. Proactive Observability & Performance Tuning

In the modern stack, monitoring has evolved into Observability. We no longer just care if the database is up; we care why a specific query is slow and how it relates to the application’s user experience.

| Concept | Traditional Monitoring | Modern Observability |

|---|---|---|

| Core Question | “Is the system healthy?” (The “What”) | “Why is this happening?” (The “How”) |

| Data Scope | Predetermined metrics (CPU, Disk, RAM). | Unified Telemetry (Logs, Metrics, Traces). |

| Visibility | Known Unknowns: Alerts for failures you’ve seen. | Unknown Unknowns: Exploring novel failure modes. |

| Approach | Reactive: Threshold-based alerts (e.g., CPU > 80%). | Proactive: Using Insights to find bottlenecks. |

| Key GCP Tool | Cloud Monitoring Dashboards & Alerts. | Query Insights, Cloud Trace, Spanner Key Visualiser. |

The Diagnostic Toolkit

- Query Insights: This is the “X-ray” for Cloud SQL and AlloyDB. It allows you to see the exact query string, the user who ran it, and the “wait events” (like lock contention or I/O bottlenecks) causing the delay.

- Cloud Trace: This is the bridge between code and data. While Query Insights tells you what is slow in the database, Cloud Trace lets you see how that latency impacts the entire request life cycle—from the initial user click in the frontend to the final row fetch in the backend.

Active Monitoring vs. SLOs

You aren’t just looking at CPU metrics anymore; you are managing Service Level Objectives (SLOs). This starts by identifying Critical User Journeys (CUJs), for example, a customer completing a checkout. Once you understand the journey, you identify the Service Level Indicators (SLIs), the specific database metrics like “99th percentile query latency,” that impact that journey. From there, you set an SLO as your internal target.

| Term | What it is | Database Context Example |

|---|---|---|

| SLI (Indicator) | The specific metric used to measure performance. | “The time taken to execute a SELECT query” or “Successful vs. failed connection attempts.” |

| SLO (Objective) | The internal target for that metric over a period of time. | “99% of queries must complete in under 100ms over a rolling 30-day period.” |

| Error Budget | The “allowable” pain (100% minus the SLO). | “You have ~7 hours of ‘slow’ performance allowed per month before you must freeze new feature releases.” |

| SLA (Agreement) | The external business contract (with financial consequences). | “If the database is available less than 99.9% of the month, the provider owes a 10% credit.” |

Architect’s Logic: If your 99th percentile latency exceeds 100ms, your observability stack should tell you which specific Spanner partition is “hot” or which Bigtable app profile is experiencing replication lag before it breaches your SLO and impacts the customer.

NB: If you want to dive deeper into the world of SRE, error budgets, and reliability engineering, check out my Google Cloud Professional Cloud DevOps Engineer Exam Guide.

Domain 6. AI & Modern Trends: The “Intelligent” Database

The Professional Database Engineer exam has fully embraced the intersection of data and Generative AI. You must understand how to turn a standard database into a Vector Store to support RAG (Retrieval-Augmented Generation) workflows and how to bridge the gap between structured SQL and natural language.

Vector Databases & Embeddings

To support GenAI, you must understand Vector Embeddings: numerical representations that capture semantic meaning.

- pgvector & ScaNN: In Cloud SQL and AlloyDB, you’ll use the pgvector extension. AlloyDB stands out by using the ScaNN (Scalable Nearest Neighbors) index, which is up to 10x faster than standard PostgreSQL indexing for high-dimensional vector searches.

- Native Vector Search in Spanner: Spanner supports vector types and ANN (Approximate Nearest Neighbor) search natively, allowing semantic searches across globally distributed datasets with millisecond latency.

Model Integration & Vertex AI

Instead of moving data to the model (ETL), modern patterns bring the model to the data.

- Direct Model Calling: Call Vertex AI models (like Gemini) directly from a SQL query using ML.PREDICT in Spanner or google_ml.predict_row in AlloyDB.

- Real-time Enrichment: Use this for sentiment analysis, translations, or predictions as part of a standard INSERT or SELECT statement, eliminating complex external pipelines.

Operational AI & Gemini

AI is now a co-pilot for the database engineer/architect, assisting in the management and optimisation of the platform.

- Gemini in Databases: Leveraged for AI-assisted troubleshooting, query plan explanations, and performance optimisation.

- DMS Schema Conversions: Gemini is integrated into the Database Migration Service to assist in heterogeneous migrations, such as automatically rewriting complex Oracle PL/SQL triggers into PostgreSQL-compatible code.

- Natural Language to SQL (NL2SQL): AlloyDB AI allows conversational queries (e.g., “Top sales in London last quarter?”) to be translated into SQL automatically via Gemini.

Recommended Training Material

Don’t just watch videos; engage with the documentation and labs that focus on systemic design.

- Official Exam Guide

- Google Skills Boost: Database Engineer Path

- Spanner & Bigtable Whitepapers

- Official Practice Questions

Conclusion

Renewing this certification was a wake-up call. The exam is now a comprehensive assessment of your ability to manage the data heart of an enterprise cloud. You are expected to be part DBA, part Security Engineer, and part Systems Architect.

Good luck with your preparation!

Thanks for reading! Please feel free to share, subscribe for updates, or follow me on LinkedIn.

Other Guides: If you’re new to Google Cloud certifications, or you’re deciding what certification to do next, check out my other blog posts covering:

- Google Cloud Generative AI Leader Certification (GAIL)

- Google Cloud Digital Leader Certification (CDL)

- Google Cloud Associate Data Practitioner Certification (ADP)

- Google Cloud Professional Cloud Architect (PCA) Certification

- Google Cloud Professional Cloud Architect (PCA) Renewal Certification

- Google Cloud Professional Network Engineer Certification (PNE)

- Google Cloud Professional Cloud DevOps Engineer